Using ETF Internal Analytics to Identify Mean Reversion Opportunities (python)

/FOR A DEEPER DIVE INTO ETF PERFORMANCE AND RELATIVE VALUE SUBSCRIBE TO THE ETF INTERNAL ANALYTICS PACKAGE HERE

Since I started producing the following graphic for the ETF Internal Analytics product, I found the weekly return bin information compelling. I became curious about whether there was an opportunity to be exploited in the distribution patterns.

Blackarbs ETF Internal Analytics Sample for SPY

I distilled all the questions I had into two:

- Does the percentage of ETF component stocks at various return levels provide actionable information?

- Can a long-short market-neutral strategy be constructed by analyzing the relative return dispersion of each ETF's stock components?

To answer these questions I used a combination of tools/data sources including State Street's SPDR Holdings data, the Yahoo Finance API, and Python. To start I import the necessary packages.

from decimal import Decimal

dplaces = Decimal('0.0001')

import pandas as pd

from pandas.tseries.offsets import *

import pandas_datareader.data as web

import numpy as np

import matplotlib.pyplot as plt

import matplotlib as mpl

%matplotlib inline

sz = 8

mlt = 1.66

size=(mlt * sz, sz)

import seaborn as sns

sns.set_style('white', {"xtick.major.size": 2, "ytick.major.size": 2})

flatui = ["#9b59b6", "#3498db", "#95a5a6", "#e74c3c", "#34495e", "#2ecc71","#f4cae4"]

sns.set_palette(sns.color_palette(flatui,7))

from collections import OrderedDict as od

import _blk_ETF_Internals_

etfi = _blk_ETF_Internals_.etfInternals()

Below I define `all_etf_symbols` as an ordered dictionary with key, value pairs equivalent to the ETF symbol, and a list of the ETF's component stocks. For brevity's sake I omit some of the data cleanup processes I did to construct the dictionary.

all_etf_symbols = od([

('SPY',SPY), ('DIA',DIA), ('QQQ',QQQ),

('XLB',XLB), ('XLE',XLE), ('XLF',XLF),

('XLI',XLI), ('XLK',XLK), ('XLP',XLP),

('XLU',XLU), ('XLV',XLV), ('XLY',XLY),

('FTSE',FTSE), ('DAX',DAX), ('TSX',TSX), ('ASX',ASX)

])

Next I define a convenience function to get the ETF prices. I also create a list of date tuples with each member of the tuple being a date from the same week anchored to Monday and Friday. This index gets reused multiple times.

def _get_px(symbol, start, end):

return web.DataReader(symbol, 'yahoo', start, end)['Adj Close']

# ======================================================== #

# get query dates anchored to last 52 weeks Monday

start_query, end_query = etfi._get_query_dates()

# ======================================================== #

# Get ETF/Index price data for performance analytics

etf_syms = ['SPY', 'DIA', 'QQQ', 'XLB', 'XLE', \

'XLI', 'XLK','XLP', 'XLU', 'XLV', 'XLY', \

'^FTSE', '^GDAXI', '^GSPTSE', '^AXJO']

ETF_px = pd.DataFrame()

for sym in etf_syms:

ETF_px[sym] = _get_px(sym, start_query, end_query)

rets = np.log(ETF_px / ETF_px.shift(1)).dropna()

# ======================================================== #

# create list of date tuples with weekly Monday and Friday dates

today = pd.datetime.today().date()

date_str = today.strftime('%m-%d-%y')

start = today - 252 * BDay()

idx_monday = pd.bdate_range(start, today - 5 * BDay(), freq='W-MON')

idx_friday = pd.bdate_range(start, today, freq='W-FRI')

dates = []

n = 0

for ifri in idx_friday[1:]:

try:

n += 1

imon = idx_monday[n - 1]

dates.append((imon, ifri))

except: break

Next we calculate the return bins and organize them by dates. I split this process by separating the positive return bins and negative return bins. Additionally the data is indexed so that the return bin calculations we perform are available the following Monday to avoid injecting look-ahead bias into the analysis. To facilitate this portion of the analysis I define several convenience functions.

# ======================================================================= #

def _get_return_bins(ETF_symbol, symbols, location_h5, start_date, end_date):

"""Function to aggregate the size of the return bins

Parameters:

===========

ETF_symbol = str() - etf symbol being analyzed

symbols = list of ETF component symbols

location_h5 = str() - hdf5 file location which stores ETF raw price data

start_date = pd.datetime() - start date of lookback

end_date = pd.datetime()

Returns:

========

N_pos = pd.DataFrame() - with stock counts (absolute and percent)

meeting or exceeding each return threshold with

index=list of thresholds

N_neg = pd.DataFrame() - see above

"""

"""Extract Close Prices"""

px = etfi._extract_adjclose_px(location_h5, symbols)

"""Set key parameters"""

N_stocks = len(px.columns)

log_returns = etfi._calc_log_returns(px)

log_returns = log_returns.ix[start_date:end_date]

"""Calculate thresholds"""

pos_thresh = [0., .01, .02, .03, .04, .05, .06, .07, .1, .15, .2]

neg_thresh = [ flt * -1. for flt in pos_thresh ]

N_pos = _calc_return_thresholds(log_returns.resample('1W', how='sum'), pos_thresh, N_stocks, pos=True)

N_neg = _calc_return_thresholds(log_returns.resample('1W', how='sum'), neg_thresh, N_stocks)

return N_pos, N_neg

# ======================================================================= #

def _calc_return_thresholds(log_returns, list_of_thresholds,

N_stocks, pos=None):

"""Function to calculate the quantity of stocks

meeting or exceeding a return threshold

Parameters:

===========

list_of_thresholds: a list of return thresholds

log_returns: dataframe of equity returns

N_stocks = int() > 0: count of component stocks

pos = boolean, if True calculates positive thresholds else negative

Returns:

========

N_thresh = pd.DataFrame() - stock counts (absolute and percent)

meeting or exceeding each return threshold with

index=list of thresholds

"""

if pos:

N_thresh = pd.DataFrame(columns=['Count',

'Percent of Total Stocks'], index=list_of_thresholds)

for thresh in list_of_thresholds:

l = [1 for i in log_returns.ix[-1] if i >= thresh]

N_thresh.loc[thresh]['Count'] = sum(l)

N_thresh['Percent of Total Stocks'] = (N_thresh['Count'] / N_stocks)

return N_thresh

else:

N_thresh = pd.DataFrame(columns=['Count',

'Percent of Total Stocks'], index=list_of_thresholds)

for thresh in list_of_thresholds:

l = [1 for i in log_returns.ix[-1] if i <= thresh]

N_thresh.loc[thresh]['Count'] = sum(l)

N_thresh['Percent of Total Stocks'] = (N_thresh['Count'] / N_stocks)

return N_thresh

# ======================================================================= #

def _quantize(val):

return Decimal(val).quantize(dplaces)

def _format_bins(npos, nneg):

"""Function to quantize Percent of Stocks column for readability

Parameters:

===========

npos, nneg = pd.DataFrame() containing return bin data

Returns:

========

npos, nneg = reformatted pd.Series() - returns Percent of Total Stocks

"""

npos['Percent of Total Stocks'] = npos['Percent of Total Stocks'].apply(_quantize)

nneg['Percent of Total Stocks'] = nneg['Percent of Total Stocks'].apply(_quantize)

return npos, nneg

def _add_columns(df, empty_df, etf_sym):

"""Function to dynamically add columns to empty dataframe"""

empty_df[etf_sym] = df['Percent of Total Stocks']

return empty_df

"""

1. Separate data by positive(negative) return thresholds

2. Iterate through index with length = len of dates list

3. For each i (interval/date) compute the size (absolute/percent)

of return bins

4. Construct ordered dictionary using key, value pairs

equal to the Date of info availability, and the

dataframe of return bin data

"""

idx = range(len(dates))

by_date_pos = od()

by_date_neg = od()

MON, FRI = 0, 1

for i in idx:

pos_df = pd.DataFrame()

neg_df = pd.DataFrame()

try:

for etf_sym, symbols in all_etf_symbols.items():

start_date = dates[i][MON]

end_date = dates[i][FRI]

location = price_path + r'{}\{} ETF Market Internals

Price Data {}.h5'.format(date_str, etf_sym, date_str)

npos, nneg = _get_return_bins(etf_sym, symbols,

location, start_date, end_date)

npos, nneg = _format_bins(npos, nneg)

pos_df = _add_columns(npos, pos_df, etf_sym)

neg_df = _add_columns(nneg, neg_df, etf_sym)

# The return bin information from previous week

is available the next monday

by_date_pos[dates[i+1][MON]] = pos_df

by_date_neg[dates[i+1][MON]] = neg_df

except: break

Here's a sample output after running the above script. Using the below example focusing in on the SPY column, the data would be interpreted as, on Monday 4/20/2016, ~17% of SPY's component stocks had gained 1% or more over the previous week.

Now we calculate and group each ETF's weekly returns by date. Like before we index the data such that previous week's return data is available the following Monday. I define two convenience functions to help. The first function simply returns the log returns over the selected period. The second function converts between Yahoo Finance symbols and the symbols used for this study.

# ======================================================================= #

def _weekly_returns(returns, start_date, end_date):

"""Function to compute sum of log returns over some period

Parameters:

===========

returns = pd.DataFrame() - ETF returns

start_date = pd.datetime()

end_date = pd.datetime()

Returns:

========

by_week_returns = pd.Series() - index = ETF symbols,

data = sum() of previous week's returns

"""

selected_returns = returns.ix[start_date:end_date]

by_week_returns = selected_returns.sum()

return by_week_returns

# ======================================================================= #

def _convert_symbol(symbol, reverse=None):

'''Function to convert foreign index symbol to yahoo finance symbol'''

if not reverse:

compatible_syms = ['^FTSE', '^GDAXI', '^GSPTSE', '^AXJO']

incompatible_syms = ['FTSE','DAX','TSX','ASX']

compatible_symbol = None

# iterate to check if any symbols are incompatible

for inc in incompatible_syms:

if symbol == inc:

idx_loc = incompatible_syms.index(inc)

compatible_symbol = compatible_syms[idx_loc]

return compatible_symbol

# if compatible_symbol is None then symbol is good; return it

if not compatible_symbol:

return symbol

elif reverse:

compatible_syms = ['FTSE','DAX','TSX','ASX']

incompatible_syms = ['^FTSE', '^GDAXI', '^GSPTSE', '^AXJO']

compatible_symbol = None

# iterate to check if any symbols are incompatible

for inc in incompatible_syms:

if symbol == inc:

idx_loc = incompatible_syms.index(inc)

compatible_symbol = compatible_syms[idx_loc]

return compatible_symbol

# if compatible_symbol is None then symbol is good; return it

if not compatible_symbol:

return symbol

# ======================================================================= #

"""

1. Create ordered dict to hold weekly return data organized by date

"""

by_date_returns = od()

idx = range(len(dates))

for n in idx:

try:

start_date = dates[n][MON]

end_date = dates[n][FRI]

tmp_rets = _weekly_returns(rets, start_date, end_date)

idx = []

for i in tmp_rets.index:

sec = _convert_symbol(i, reverse=True)

idx.append(sec)

tmp_rets.index = idx

# Previous week's return data will be available the following monday

by_date_returns[dates[n+1][MON]] = tmp_rets

except: break

Here's a sample output of the `by_date_returns`.

Next, I iterate over each return threshold partitioning the ETF's percent of total stocks greater than the threshold into two groups. Groups assigned '1' have a percentage in the top half of results and those assigned '0' have a percentage in the bottom half.

"""

Get time series of returns for Symbols in the top half of return distribution

1. Select positive(negative) return bins

2. Group by Percent Total of return bin distribution

3. Select group

4. Examine next week's returns

5. Store results

"""

# POSITIVE THRESHOLDS

# ======================================================================= #

# Equally divide return bin counts(percentages) using pd.qcut()

pos_thresh = [0., .01, .02, .03, .04, .05, .06, .07, .1, .15, .2]

pbt_dict = od() # agg pos_bin_dict by return thresholds

for thresh in pos_thresh:

try:

pos_bin_dict = {}

for k, v in by_date_pos.items():

tmp = pd.DataFrame()

tmp['Percent_Total'] = v.loc[thresh].astype(float)

tmp['Halves'] = pd.qcut(tmp['Percent_Total'], 2, labels=False)

pos_bin_dict[k] = tmp.sort_values('Percent_Total',

ascending=False)

except: pass

pbt_dict[thresh] = pos_bin_dict

Sample output of `pbt_dict` output.

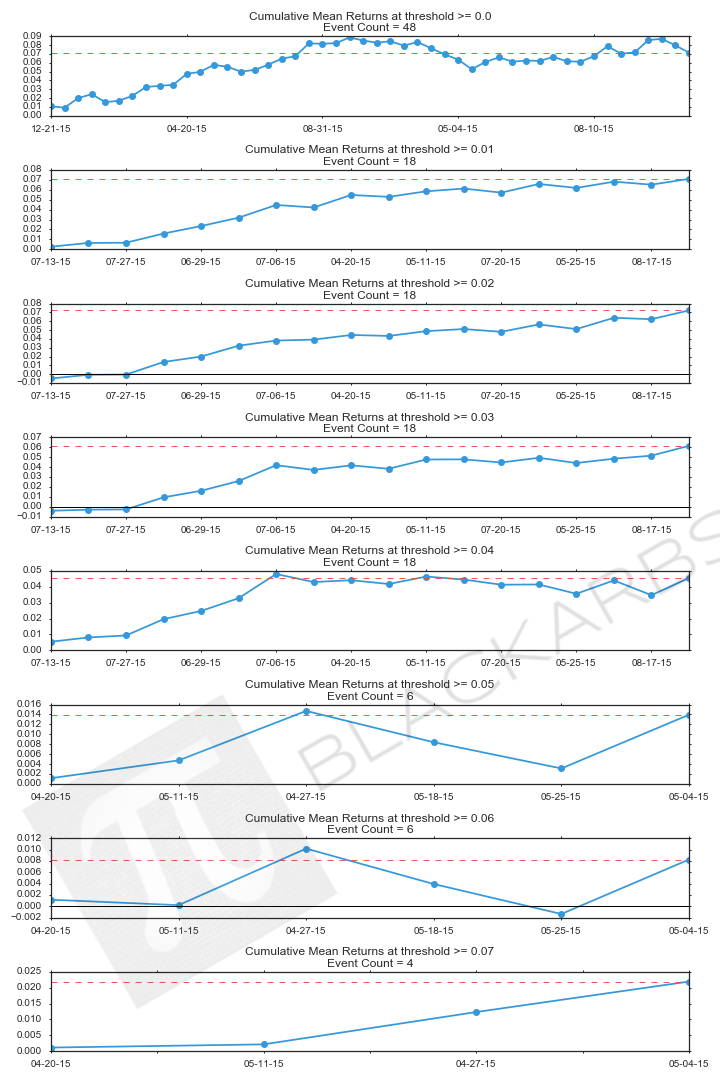

Now I calculate a theoretical holding period return of one week. To test whether mean reversion is a tradeable opportunity, this return assumes you long the bottom half of stocks i.e., the stocks with lowest percentage of components exceeding a return threshold, while shorting the top half of stocks i.e., the stocks with the highest percentage of components exceeding a return threshold. Afterwards I print out the average Long - Short return and the cumulative return for each return threshold.

# ======================================================================= #

# at each threshold calculate cumulative returns by return bin

HPR = {} # hpr by thresh

for thresh in pbt_dict.keys():

hpr_long = pd.Series() # long portfolio holding period rets

hpr_short = pd.Series()

LS = pd.Series()

for k, v in pbt_dict[thresh].items():

tgt_date = pd.to_datetime(k)

# select half and get symbols

top_q_syms = list(v[v['Halves']==1].index) # short top group

bottom_q_syms = list(v[v['Halves']==0].index) # long bottom group

try:

for i, n in enumerate(dates):

if n[MON] == tgt_date: # get index location of date

next_monday_close = dates[i + 1][MON]

except: continue

hpr_long.loc[tgt_date.strftime('%m-%d-%y')] = by_date_returns[next_monday_close][bottom_q_syms].mean()

hpr_short.loc[tgt_date.strftime('%m-%d-%y')] = by_date_returns[next_monday_close][top_q_syms].mean()

long = hpr_long.loc[tgt_date.strftime('%m-%d-%y')]

short = hpr_short.loc[tgt_date.strftime('%m-%d-%y')]

LS.loc[tgt_date.strftime('%m-%d-%y')] = long - short

HPR[thresh] = LS

# ======================================================================= #

for thresh in fkeys:

try:

print('positive threshold: {:3.2%} | mean return: {:3.2%} |

sum: {:3.2%}'.format(thresh, Decimal(HPR[thresh].mean()).quantize(dplaces),

Decimal(HPR[thresh].sum()).quantize(dplaces)))

except: pass

We can see some interesting results already. Now we do the same analysis for the negative return thresholds. This time we take a theoretical long position in the ETF's with the highest percentage of their component stocks falling below a specified threshold while shorting the ETF's with the lowest percentage of their component stocks falling below the threshold.

# NEGATIVE THRESHOLDS

# ======================================================================= #

# Equally divide return bin counts(percentages) using pd.qcut()

pos_thresh = [0., .01, .02, .03, .04, .05, .06, .07, .1, .15, .2]

neg_thresh = [ flt * -1. for flt in pos_thresh ]

nbt_dict = {} # agg pos_bin_dict by return thresholds

for thresh in neg_thresh:

try:

neg_bin_dict = {}

for k, v in by_date_neg.items():

tmp = pd.DataFrame()

tmp['Percent_Total'] = v.loc[thresh].astype(float)

tmp['Halves'] = pd.qcut(tmp['Percent_Total'], 2, labels=False)

neg_bin_dict[k] = tmp.sort_values('Percent_Total', ascending=False)

except: pass

nbt_dict[thresh] = neg_bin_dict

# ======================================================================= #

HPR_n = {} # hpr by thresh

for thresh in nbt_dict.keys():

hpr_long = pd.Series() # long portfolio holding period rets

hpr_short = pd.Series()

LS = pd.Series()

for k, v in nbt_dict[thresh].items():

tgt_date = pd.to_datetime(k)

# select halves and get symbols

top_q_syms = list(v[v['Halves']==1].index)

bottom_q_syms = list(v[v['Halves']==0].index)

try:

for i, n in enumerate(dates):

if n[MON] == tgt_date: # get location of date

next_monday_close = dates[i + 1][MON]

except: continue

hpr_long.loc[tgt_date.strftime('%m-%d-%y')] = by_date_returns[next_monday_close][top_q_syms].mean()

hpr_short.loc[tgt_date.strftime('%m-%d-%y')] = by_date_returns[next_monday_close][bottom_q_syms].mean()

long = hpr_long.loc[tgt_date.strftime('%m-%d-%y')]

short = hpr_short.loc[tgt_date.strftime('%m-%d-%y')]

LS.loc[tgt_date.strftime('%m-%d-%y')] = long - short

HPR_n[thresh] = LS

# ======================================================================= #

for thresh in sorted(list(nbt_dict.keys()), reverse=True):

if len(HPR_n[thresh])>1:

try:

print('negative threshold: {:3.2%} | mean return: {:3.2%} |

sum: {:3.2%}'.format(thresh,

Decimal(HPR_n[thresh].mean()).quantize(dplaces),

Decimal(HPR_n[thresh].sum()).quantize(dplaces)))

except: pass

Again we see some interesting results. On an event basis the average return for the negative mean reversion strategy is lower than the positive strategy, however, the cumulative sums of the returns are slightly higher. Let's review the cumulative return plots before drawing any conclusions.

POsitive mean reversion strategy returns

Negative mean reversion strategy returns

conclusions

By examining the percentage of ETF component stocks meeting or exceeded a return threshold and comparing the output across ETFs this analysis shows that there are short term mean reversion opportunities. Using positive return thresholds, the strategy calls for going long the ETFs with the lowest percentage of component stocks exceeding a return level and shorting the ETFs with the highest percentage of component stocks exceeding the level. Using the negative return thresholds, the strategy calls for going long the ETFs which the highest percentage of component stocks falling below the return level and going short the ETFs with the lowest percentage of component stocks falling below the return level. There are several details requiring further research but the most important is whether the average return per event(trade) is enough to overcome transaction costs and the bid/ask spread.